General Introduction

This file documents the XML extension of GNU Awk (gawk).

This extension allows direct processing of XML files with gawk.

Copyright (C) 2000–2002, 2004–2007, 2014, 2017 Free Software Foundation, Inc.

This is Edition 1.2 of XML Processing With gawk,

for the 1.0.4 (or later) version of the

XML extension of the GNU implementation of AWK.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with the Invariant Sections being “GNU General Public License”, with the Front-Cover Texts being “A GNU Manual”, and with the Back-Cover Texts as in (a) below. A copy of the license is included in the section entitled “GNU Free Documentation License”.

- The FSF’s Back-Cover Text is: “You have the freedom to copy and modify this GNU manual.”

Table of Contents

- Preface

- 1 AWK and XML Concepts

- 2 Reading XML Data with POSIX AWK

- 3 XML Core Language Extensions of gawk

- 4 Some Convenience with the xmllib library

- 5 DOM-like access with the xmltree library

- 6 Problems from the newsgroups comp.text.xml and comp.lang.awk

- 7 Some Advanced Applications

- 7.1 Copying and Modifying with the xmlcopy.awk library script

- 7.2 Reading an RSS news feed

- 7.3 Using a service via SOAP

- 7.4 Loading XML data into PostgreSQL

- 7.5 Converting XML data into tree drawings

- 7.6 Generating a DTD from a sample file

- 7.7 Generating a recursive descent parser from a sample file

- 7.8 A parser for Microsoft Excel’s XML file format

- 8 Reference of XML features

- 8.1 XML features built into the gawk interpreter

- 8.1.1

XMLDECLARATION: integer indicates begin of document - 8.1.2

XMLMODE: integer for switching on XML processing - 8.1.3

XMLSTARTELEM: string holds tag upon entering element - 8.1.4

XMLATTR: array holds attribute names and values - 8.1.5

XMLENDELEM: string holds tag upon leaving element - 8.1.6

XMLCHARDATA: string holds character data - 8.1.7

XMLPROCINST: string holds processing instruction target - 8.1.8

XMLCOMMENT: string holds comment - 8.1.9

XMLSTARTCDATA: integer indicates begin of CDATA - 8.1.10

XMLENDCDATA: integer indicates end of CDATA - 8.1.11

LANG: env variable holds default character encoding - 8.1.12

XMLCHARSET: string holds current character set - 8.1.13

XMLSTARTDOCT: root tag name indicates begin of DTD - 8.1.14

XMLENDDOCT: integer indicates end of DTD - 8.1.15

XMLUNPARSED: string holds unparsed characters - 8.1.16

XMLERROR: string holds textual error description - 8.1.17

XMLROW: integer holds current row of parsed item - 8.1.18

XMLCOL: integer holds current column of parsed item - 8.1.19

XMLLEN: integer holds length of parsed item - 8.1.20

XMLDEPTH: integer holds nesting depth of elements - 8.1.21

XMLPATH: string holds nested tags of parsed elements - 8.1.22

XMLENDDOCUMENT: integer indicates end of XML data - 8.1.23

XMLEVENT: string holds name of event - 8.1.24

XMLNAME: string holds name assigned to XMLEVENT

- 8.1.1

- 8.2

gawk-xmlCore Language Interface Summary - 8.3 xmllib

- 8.4 xmlbase

- 8.5 xmlcopy

- 8.6 xmlsimple

- 8.7 xmltree

- 8.8 xmlwrite

- 8.1 XML features built into the gawk interpreter

- 9 Reference of Books and Links

- Appendix A GNU Free Documentation License

- Index

Next: AWK and XML Concepts, Previous: General Introduction, Up: General Introduction [Contents][Index]

Preface

In June of 2003, I was confronted with some textual configuration

files in XML format and I was scared by the fact that my favorite

tools (grep and awk) turned out to be mostly

useless for extracting information from these files.

It looked as if AWK’s way of processing files line by line

had to be replaced by a node-traversal of tree-like XML data.

For the first implementation of an extended gawk, I chose

the expat library to help me reading XML files.

With a little help from Stefan Tramm I went on selecting features and implemented what is now called XMLgawk over the Christmas Holidays 2003. In June 2004, Manuel Collado joined us and started collecting his comments and proposals for the extension. Manuel also wrote a library for reading XML files into a DOM-like structure.

In Septermber 2004, I wrote the first version of this web page. Andrew Schorr flooded my mailbox with patches and suggestions for changes. His initiative pushed me into starting the SourceForge project. This happened in March 2005 and since then, all software changes go into a CVS source tree at SourceForge (thanks to them for providing this service). Andrew’s urgent need for a production system drove development in early 2005. Significant changes were made:

- Parsing speed was doubled to increase efficiency when reading large data bases.

- Manuel suggested and Andrew implemented some simplifications

in user-visible patterns like

XMLEVENTandXMLNAME. - Andrew encapsulated XMLgawk into a

gawkextension, loadable as a dynamic library at runtime. This also allowed for buildinggawkwithout the XML extension. That’s how the-loption and@loadwere introduced. - Andrew cleaned up the autotool mechanism (

Makefile.ametc.) and found an installation mechanism which allows an easy and collision-free installation in the same directory as Arnold’s GNU Awk. He also made Arnold’sigawkobsolete by implementing the-ioption. April 2005 saw the Alpha release ofxgawk, as a branch ofgawk-3.1.4.

In August 2005, Hirofumi Saito held a presentation at the Lightweight Language Day and Night in Japan. His little slideshow demonstrated the importance of multibyte characters in any modern programming language. Hirofumi Saito also did the localization of our source code for the Japanese language. Kimura Koichi reported and fixed some problems in handling of multibyte characters. He also found ways to get all this running on several flavours of Microsoft Windows.

Meanwhile in Summer 2005, Arnold had released gawk-3.1.5 and

I applied all his 219 patches to our CVS tree over the Christmas

Holidays 2005. Andrew applied some more bug fixes from the GNU mailing

archive and so the current Beta release of xgawk-3.1.5 is

already a bit ahead of Arnold’s gawk-3.1.5.

Jürgen Kahrs

Bremen, Germany

April, 2006

Next: Foreword to Edition 1.2, Up: Preface [Contents][Index]

Foreword to Edition 0.3

In August 2006, Arnold and friends set up a mirror of Arnold’s

source tree as a CVS repository at

Savannah.

It is now much easier for us to understand recent changes in

Arnold’s source tree. We strive to merge all of them immediately

to our source tree. This merge process has been enormously simplified

by a weekly cron job mechanism (implemented by Andrew)

that examines recent activities in Arnold’s tree and sends an

email to our mailing list.

Some more problems and fixes in handling multibyte characters have been reported by our Japanese friends to Arnold and us. For example, Hirofumi Saito and others forwarded patches for the half-width katakana characters in character classes in ShiftJIS locale.

Since January 2007, there is a new target valgrind in

the top level Makefile. This feature was implemented

for detection of memory leaks in the interpreter while running

the regression test cases. We found small memory leaks in our

time and mpfr extension instantly with this new

feature.

March 2007 saw much activity. First we introduced Victor Paesa’s new extension for the GD library. Then we merged Paul Eggert’s file floatcomp.c (floating point / long comparison) from Arnold’s source tree. We also merged the changes in regression test cases and documentation due to changed behaviour in numerical calculations (infinity and not a number) and formatting of these.

Stefan Tramm held a 5-minute Lightning Talk on xgawk at OSCON 06.

Hirofumi Saito took part in the Lightweight Language Conference 2006.

The new Reading XML Data with POSIX AWK describes a template

script getXMLEVENT.awk that allows us to write portable

scripts in a manner that is a mostly compatible subset of the

XMLgawk API. Such scripts can be run on any POSIX-compliant AWK

interpreter – not just xgawk.

The new Copying and Modifying with the xmlcopy.awk library script describes a library script for making slightly modified copies of XML data.

Thanks to Andrew’s mechanism for systematic reporting of patches

applied by Arnold to his gawk-stable tree, Andrew and I

caught up with recent changes in Arnold’s source tree.

As a consequence, xgawk is now based upon the recent official

gawk-3.1.6 release.

Jürgen Kahrs

Bremen, Germany

December, 2007

Previous: Foreword to Edition 0.3, Up: Preface [Contents][Index]

Foreword to Edition 1.2

In October 2014 the xgawk project has been restructured. The set

of gawk extensions has been splitted. There is now a separate

directory and distribution archive for each individual gawk

extension. The SourceForge project name has changed to

gawkextlib.

XMLgawk is no longer the name of the whole set of extensions, nor of the

individual XML extension. The XML extension is now called

gawk-xml. The xmlgawk name designates a script that

invokes gawk with the XML extension loaded and a convenience

xmllib.awk included.

The 1.2 edition of this manual includes documentation of the companion

xml*.awk libraries. The body of the manual has changed only a

little, but has been revised in order to update obsolete names,

references or versions of the related stuff.

Manuel Collado

February, 2017

FIXME: This document has not been completed yet. The incomplete portions have been marked with comments like this one.

Next: Reading XML Data with POSIX AWK, Previous: Preface, Up: General Introduction [Contents][Index]

1 AWK and XML Concepts

This chapter provides a (necessarily) brief intoduction to

XML concepts. For many applications of gawk XML processing,

we hope that this is enough. For more advanced tasks, you will need

deeper background, and it may be necessary to switch to other tools

like XSL processors

- How does XML fit into AWK’s execution model ?

- How to traverse the tree with gawk

- Looking closer at the XML file

Next: How to traverse the tree with gawk, Previous: AWK and XML Concepts, Up: AWK and XML Concepts [Contents][Index]

1.1 How does XML fit into AWK’s execution model ?

But before we look at XML, let us first reiterate how AWK’s

program execution works and what to expect from XML processing

within this framework. The gawk man page summarizes

AWK’s basic execution model as follows:

An AWK program consists of a sequence of pattern-action statements and optional function definitions.

pattern { action statements }

function name(parameter list) { statements }

… For each record in the input, gawk tests to see if it matches any pattern in the AWK program. For each pattern that the record matches, the associated action is executed. The patterns are tested in the order they occur in the program. Finally, after all the input is exhausted, gawk executes the code in the END block(s) (if any).

A look at a short and simple example will reveal the strength

of this abstract description. The following script implements

the Unix tool wc (well, almost, but not completely).

BEGIN { words=0 }

{ words+=NF }

END { print NR, words }

Before opening the file to be processed, the word counter is initialized with 0. Then the file is opened and for each line the number of fields (which equals the number of words) is added to the current word counter. After reading all lines of the file, the resulting word counter is printed as well as the number of lines.

Store the lines above in a file named wc.awk and invoke it with

gawk -f wc.awk datafile.xml

This kind of invocation will work on all platforms. In a Unix environment (or in the Cygwin Unix-emulation on top of Microsoft Windows) it is more comfortable to store the script above into an executable file. To do so, write a file named wc.awk, with the first line being

#!/usr/bin/gawk -f

followed by the lines above. Then make the file wc.wk executable with

chmod a+x wc.awk

and invoke it as

wc.awk datafile.xml

When looking at Figure 1.1 from top to bottom,

you will recognize that each line of the data file is represented

by a row in the figure. In each row you see NR (the number

of the current line) on the left and the pattern (the condition for

execution) and its action on the right. The first and

last rows represent BEGIN (initialization) and

END (finalization).

| Input data | NR (given) | Pattern | Action |

|---|---|---|---|

| Before reading | undefined | BEGIN | words=0 |

| Line | 1 | words+=NF | |

| Line | 2 | words+=NF | |

| Line | 3 | words+=NF | |

| Line | ... | words+=NF | |

| After reading | total lines | END | print NR, words |

Figure 1.1: Execution model of an AWK program with ASCII data, proceeding top to bottom

We could use this script to process any XML file. But the result it yielded would not be too meaningful to us. When processing XML files, you are not really interested in the number of lines or words. Take, for example, this XML file, a DocBook file to be precise.

<?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE book PUBLIC "-//OASIS//DTD DocBook XML V4.5//EN" "http://www.oasis-open.org/docbook/xml/4.5/docbookx.dtd"> <book id="hello-world" lang="en"> <bookinfo> <title>Hello, world</title> </bookinfo> <chapter id="introduction"> <title>Introduction</title> <para>This is the introduction. It has two sections</para> <sect1 id="about-this-book"> <title>About this book</title> <para>This is my first DocBook file.</para> </sect1> <sect1 id="work-in-progress"> <title>Warning</title> <para>This is still under construction.</para> </sect1> </chapter> </book>

Figure 1.2: Example of some XML data (DocBook file)

Reading through this jungle of angle brackets, you will notice that

the notion of a line is not an adequate concept to describe what

you see. AWK’s idea of records and fields only makes sense

in a rectangular world of textual data being stored in rows and columns.

This notion is blind to XML’s notion of structuring textual data into

markup blocks (like <title>Introduction</title>), with beginning

and ending being marked as such by angle brackets. Furthermore,

XML’s markup blocks can contain other blocks (like a chapter contains

a title and a para). XML sees textual data as

a tree with deeply nested nodes (markup blocks). A tree is a dynamic data structure;

some people call it a recursive structure because a tree contains

other trees, which may contain even other trees. These sub-trees are not

numbered (as rows and columns) but they have names. Now that we have a coarse

understanding of the structure of an XML file, we can choose an adequate way

of picturing the situation. XML data has a tree structure, so let’s draw the

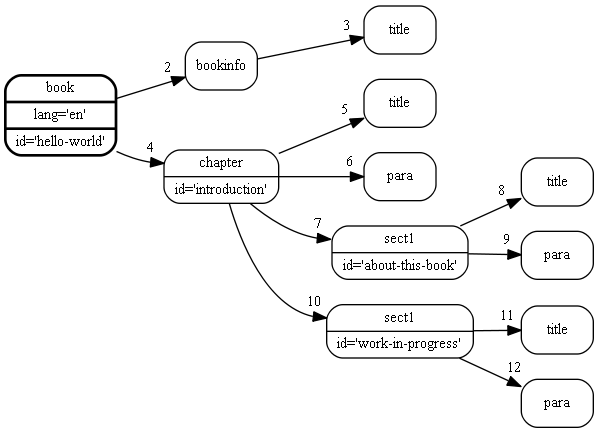

example file in Figure 1.2 above as a tree (see Figure 1.3).

Figure 1.3: XML data (DocBook file) as a tree

You can easily see that each markup block is drawn as a node in this tree. The edges in the tree reveal the nesting of the markup blocks in a much more lucid way than the textual representation. Each edge indicates that the markup block which has an arrow pointing to it, is contained in the markup block from which which the edge comes. Such edges indicate the "parent-child" relationship.

Next: Looking closer at the XML file, Previous: How does XML fit into AWK’s execution model ?, Up: AWK and XML Concepts [Contents][Index]

1.2 How to traverse the tree with gawk

Now, what could be the equivalent of a wc command

when dealing with such trees of markup blocks ? We could count

the nodes of the tree. You can store and invoke the following

script in the same way as you did for the previous script.

BEGIN { nodes = 0 }

XMLSTARTELEM { nodes ++ }

END { print nodes }

If you invoke this script with the data file in Figure 1.2, the number of nodes will be printed immediately:

gawk -l xml -f node_count.awk dbfile.xml 12

Notice the similarity between this example script and the original wc.awk which counts words. Instead of going over the lines, this script traverses the tree and increments the node counter each time a node is found. After a closer look you will find several differences between the previous script and the present one:

- The command line for

gawkhas an additional parameter-l xml. This is necessary for loading the XML extension into thegawkinterpreter so that thegawkinterpreter knows that the file to be opened is an XML file and has to be treated differently. - The node counting happens in an action which has a pattern.

Unlike the previous script (which counted on every line)

we are interested in counting the nodes only. The occurence

of a node (the beginning of a markup block) is indicated by

the

XMLSTARTELEMpattern. - There is no equivalent of the word count here, only the node count.

- It is not clear in which order the nodes of the tree are traversed.

The

bookinfonode and thechapternode are both positioned directly under thebooknode; but which is counted first ? The answer becomes clear when we return to the textual representation of the tree — textual order induces traversal order.

Do you see the numbers near the arrow heads ? These are the numbers indicating traversal order. The number 1 is missing because it is clear that the root node (framed with a bold line) is visited first. Computer Scientists call this traversal order depth-first because at each node, its children (the deeper nodes) are visited before going on with nodes at the same level. There are other orders of traversal ( breadth-first ) but the textual order in Figure 1.2 enforces the numbering in Figure 1.3.

The tree in Figure 1.3 is not balanced. The very last nodes are nested so deep that they are printed on the very right of the margin in Figure 1.3. This is not the case for the upper part of the drawing. Sometimes it is useful to know the maximum depth of such a tree. The following script traverses all nodes and at each node it compares actual depth and maximum depth to find and remember the largest depth.

@load "xml"

XMLSTARTELEM {

depth++

if (depth > max_depth)

max_depth = depth

}

XMLENDELEM { depth-- }

END { print max_depth }

Figure 1.4: Finding the maximum depth of the tree representation of an XML file with the script max_depth.awk

If you compare this script to the previous one, you will again notice some subtle differences.

-

@load "xml"is a replacement for the-l xmlon the command line. If the source text of your script is stored in an executable file, you should start the script with loading all extensions into the interpreter. The command line option-l xmlshould only be used as a shorthand notation when you are working with a one-line command line. - The variable

depthis not initialized. This is not necessary because all variables ingawkhave a value of 0 if they are used for the first time without a prior initialization. - The most

important difference you will find is the new pattern

XMLENDELEM. This is the counterpart of the patternXMLSTARTELEM. One is true upon entering a node, the other is true upon leaving the node. In the textual representation, these patterns mark the beginning and the ending of a markup block. Each time the script enters a markup block, thedepthcounter is increased and each time a markup block is left, thedepthcounter is decreased.

Later we will learn that this script can be shortened even more

by using the builtin variable XMLDEPTH which contains

the nesting depth of markup blocks at any point in time. With the

use of this variable, the script in Figure 1.4 becomes

one of these one-liners which are so typical for daily work with

gawk.

Previous: How to traverse the tree with gawk, Up: AWK and XML Concepts [Contents][Index]

1.3 Looking closer at the XML file

If you already know the basics of XML terminology, you can skip this section and advance to the next chapter. Otherwise, we recommend studying the O’Reilly book XML in a Nutshell, which is a good combination of tutorial and reference. Basic terminology can be found in chapter 2 (XML Fundamentals). If you prefer (free) online tutorials, then we recommend w3schools. See Links to the Internet, for additional valuable material.

Before going on reading, you should make sure you know the meaning of the following terms. Instead of leaving you on your own with learning these terms, we will give an informal and insufficient explanation of each of the terms. Always refer to Figure 1.2 for an example and consider looking the term up in one of the sources given above.

- Tag: name of a node

-

Attribute: variable having a name (

lang) and a value (en) -

Element: sub-tree, for example

bookinfoincludingtitle - Well-Formed: properly nested file; one tree with quoted, tag-wise distinct attributes

- DTD: formal description about which elements and attributes a file contains

- Schema: same use as DTD, but more detailed and formally itself XML (unlike DTD)

- Valid: conforming to a formal specification, usually given as a DTD or a Schema

-

Processing Instruction: screwed special-purpose element whose name is "?"; first data line often is

<?xml version="1.0" encoding="ISO-8859-1"?>

- Character Data: textual data inside an element between the tags

- Mixed Content: element that has character data inside it

- Encoding: name of a mapping between text symbols and byte sequence (ISO-8859-1)

- UTF-8: default encoding of XML; covers all text symbols available, possibly multi-byte

Still reading ? Be warned that these definitions are formally incorrect.

They are meant to get you on the right track.

Each ambitious propeller head will happily tear these definitions apart.

If you are seriously striving to become an XML propeller head yourself,

then you should not miss reading the original defining documents about the

XML technology.

The proper playing ground for anxious aspirants is the newsgroup

comp.text.xml.

I am glad none of those propeller heads reads gawk web pages — they would kill me.

Next: XML Core Language Extensions of gawk, Previous: AWK and XML Concepts, Up: General Introduction [Contents][Index]

2 Reading XML Data with POSIX AWK

Some users will try to avoid the use of the new language features described earlier. They want to write portable scripts; they have to refrain from using features which are not part of the standardized POSIX AWK. Since the XML extension of GNU Awk is not part of the POSIX standard, these users have to find different ways of reading XML data.

2.1 Steve Coile’s xmlparse.awk script

Implementing a complete XML reader in POSIX AWK would mean that all subtle details of

Unicode encodings had to be handled. It doesn’t make sense to go into

such details with an AWK script. But in 2001, Steve Coile wrote a parser

which is good enough if your XML data consists of simple tagged blocks

of ASCII characters. His script is available on the Internet as

xmlparse.awk.

The source code of xmlparse.awk is well documented and ready-to-use

for anyone having access to the Internet.

Begin your exploration of xmlparse.awk by downloading it.

As of Summer 2007, there is a typo in the file that has to be

corrected before you can start to work with the parser. Insert

a hashmark character (#) in front of the comment in line

342.

wget ftp://ftp.freefriends.org/arnold/Awkstuff/xmlparser.awk vi xmlparser.awk 342G i# ESC :wq

While you’re editing the parser script, have a look at the comments.

This is a well-documented script that explains its implementation

as well as some use cases. For example, the header summarizes almost

all details that a user will need to remember (see Figure 2.1).

There is a negligible inconsistency in the header: The file is really

named xmlparser.awk and not xmlparse.awk as stated in the

header. From a user’s perspective, the most important constraint to

keep in mind is that this XML parser needs a modern variant of

AWK. This means a POSIX compliant AWK; the old Solaris implentation

oawk will not be able to interpret this XML parser script as

intended. Invoke the XML parser for the first time with

awk -f xmlparser.awk docbook_chapter.xml

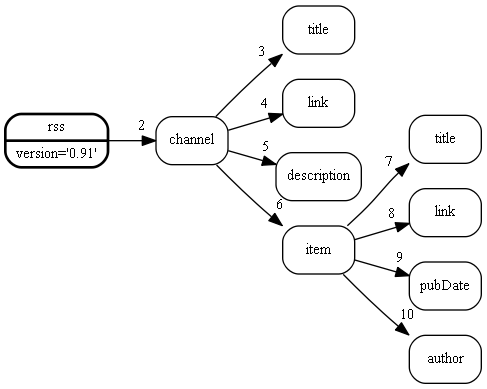

Compare the output to the original file’s content (see Figure 1.2) and its depiction as a tree (see Figure 1.3). You will notice that the first column of the output always contains the type of the items as they were parsed sequentially:

pi xml version="1.0" encoding="UTF-8" data \n decl DOCTYPE book PUBLIC "-//OASIS//DTD DocBook..."\n "http://www.oasis-open..." data \n\n begin BOOK attrib id value hello-world attrib lang value en data \n \n begin BOOKINFO data \n begin TITLE data Hello, world end TITLE ... etc. ...

This is in accordance with the guiding principles explained in the header of the parser script. Note that the description in Figure 2.1 is incomplete. More details will be provided below.

The script parses the XML data and saves each parsed item in two arrays:

-

type[3]indicates the type of the 3rd parsed XML data item. This may be any of-

"error"when an invalid item has been parsed or another error has occurred. In this case,item[3]contains the text of the error message. -

"begin"when an opening tag has been parsed. -

"end"when a closing tag has been parsed. In the case oftype[3]containing"begin"or"end",item[3]contains the name of the tag. -

"attrib"when an attribute’s name has been parsed. -

"value"when an attribute’s value has been parsed. In the case oftype[3]containing"attrib"or"value",item[3]contains the attribute’s name or value. -

"data"when the data between opening and closing tags has been parsed. -

"cdata"when character data has been parsed. -

"comment"when a comment has been parsed. -

"pi"when a processing instruction has been parsed.

-

-

item[3]contains data depending ontype[3], as distinguished in the item list above.

While you proceed reading this web page, you will notice that

the basic idea is similar to what gawk-xml does. Especially the approach

described in gawk-xml Core Language Interface Summary as

Concise Interface - Reduced set of variables shared by all events

will look familiar. The script as it is was not designed to be a

modular building block. Any application will not simply include

the xmlparser.awk file, but copy it textually and modify

the copy. Look into the original script once more and have a

closer look at the final END pattern. You will find suggestions

for several useful applications inside the END pattern.

##############################################################################

#

# xmlparse.awk - A simple XML parser for awk

#

# Author: Steve Coile <scoile@csc.com>

#

# Version: 1.0 (20011017)

#

# Synopsis:

#

# awk -f xmlparse.awk [FILESPEC]...

#

# Description:

#

# This script is a simple XML parser for (modern variants of) awk.

# Input in XML format is saved to two arrays, "type" and "item".

#

# The term, "item", as used here, refers to a distinct XML element,

# such as a tag, an attribute name, an attribute value, or data.

#

# The indexes into the arrays are the sequence number that a

# particular item was encountered. For example, the third item's

# type is described by type[3], and its value is stored in item[3].

#

# The "type" array contains the type of the item encountered for

# each sequence number. Types are expressed as a single word:

# "error" (invalid item or other error), "begin" (open tag),

# "attrib" (attribute name), "value" (attribute value), "end"

# (close tag), and "data" (data between tags).

#

# The "item" array contains the value of the item encountered

# for each sequence number. For types "begin" and "end", the

# item value is the name of the tag. For "error", the value is

# the text of the error message. For "attrib", the value is the

# attribute name. For "value", the value is the attribute value.

# For "data", the value is the raw data.

#

# WARNING: XML-quoted values ("entities") in the data and attribute

# values are *NOT* unquoted; they are stored as-is.

#

###############################################################################

Figure 2.1: Usage explained in the header of xmlparser.awk

- By checking for the occurence of an error with

if (type[idx] == "error") { ... }it is quite easy to implement a script that checks for well-formedness of some XML data.

- Several attempts have been made to introduce a simplified XML

that is easier to parse by shell scripts. Simplication of the XML

and output in a convenient line-by-line format can be implemented

with the following code fragment inside an

ENDpattern. It demonstrates how to go through all parsed items sequentially and handle each of the types appropriately.for ( n = 1; n <= idx; n += 1 ) { if ( type[n] == "attrib" ) { } else if ( type[n] == "begin" ) { } else if ( type[n] == "cdata" ) { } else if ( type[n] == "comment" ) { } else if ( type[n] == "data" ) { } else if ( type[n] == "decl" ) { } else if ( type[n] == "end" ) { } else if ( type[n] == "error" ) { } else if ( type[n] == "pi" ) { } else if ( type[n] == "value" ) { } } - One application of the framework just mentioned is an outline

script like the one in Figure 3.2. Producing

an outline output like the one in Figure 3.1 is a

matter of a few lines in AWK if you modify the

xmlparser.awkscript. Notice that this is done after the complete XML data has been read. So, at the moment of processing, the complete XML data is somehow saved in AWK’s memory, imposing some limit on the size of the data that can be processed.XMLDEPTH=0 for (n = 1; n <= idx; n += 1 ) { if ( type[n] == "attrib") { printf(" %s=", item[n] ) } else if (type[n] == "value" ) { printf("'%s'", item[n] ) } else if (type[n] == "begin" ) { printf("\n%*s%s", 2*XMLDEPTH,"", item[n]) XMLDEPTH ++ } else if (type[n] == "end" ) { XMLDEPTH -- } }If you compare the output of this application with Figure 3.1 you will notice only two differences. The first is the newline character before the very first tag; the second is the names of the tags. The

xmlparser.awkscript saves the names of the tags in uppercase letters, the exact tag name cannot be revovered without changing the internals of the XML parsing mechanism.

Next: A portable subset of gawk-xml, Previous: Steve Coile’s xmlparse.awk script, Up: Reading XML Data with POSIX AWK [Contents][Index]

2.2 Jan Weber’s getXML script

In 2005, Jan Weber posted a similar XML parser to the newsgroup

comp.lang.awk.

You can use Google to search for the script getXML and

copy it into a file. Unfortunately, Jan tried to make the script

as short as possible and often put several statements on one line.

Readability of the script has suffered severely and if you intend

to analyse the script, be prepared that some editing may be

necessary to understand it. Again, while you’re editing the parser

script, have a look at the comments. Jan has commented the one

central function of the script from a user’s perspective as

follows (see Figure 2.2). The basic approach was

taken over from the xmlparser.awk script. But there were

several constraints Jan tried to satisfy in writing his XML parser:

- The function

getXMLallows to read multiple XML files in parallel. - As a consequence, each XML event happens upon returning

from the

getXMLfunction, similar to thegetlinemechanism of AWK (see Figure 2.3). Furthermore, the user application reads files in theBEGINaction of the AWK script, not in theENDaction. - The exact names of tags and attributes are preserved, no change in case is done by the XML parser.

- Parameter passing resembles the approach described in

gawk-xmlCore Language Interface Summary as Concise Interface - Reduced set of variables shared by all events much more closely. Most importantly, attribute names and values are passed along with the tag they belong to. So, granularity of events is more coarse and user-friendly. - While the

xmlparser.awkscript stored the complete XML data into two arrays during the parsing process,getXML.awkpasses one XML event at a time back to the calling application, avoiding the unwanted waste of memory. This means, parsing large XML files becomes possible (although it doesn’t make too much sense). - This XML parser runs with the

nawkimplementation of the AWK language that comes with the Solaris Operating System. As a consequence, this XML parser is probably the most portable of all parsers described in this web page.

Again, we will demonstrate the usage of this XML parser by

implementing an outline script like the one in Figure 3.2.

Change the file getXML and replace the existing BEGIN

action with the script in Figure 2.3.

Invoke the new outline parser for the first time with

awk -f getXML docbook_chapter.xml

Compare the output to the original file’s content (see Figure 1.2),

its depiction as a tree (see Figure 1.3) and to the output

of the original outline tool that comes with the expat

parser (see Figure 3.1).

The result is almost identical to Figure 3.1, except for

one minor detail: The very first line is a blank line here.

## # getXML( file, skipData ): # read next xml-data into XTYPE,XITEM,XATTR # Parameters: # file -- path to xml file # skipData -- flag: do not read "DAT" (data between tags) sections # External variables: # XTYPE -- type of item read, e.g. "TAG"(tag), "END"(end tag), "COM"(comment), "DAT"(data) # XITEM -- value of item, e.g. tagname if type is "TAG" or "END" # XATTR -- Map of attributes, only set if XTYPE=="TAG" # XPATH -- Path to current tag, e.g. /TopLevelTag/SubTag1/SubTag2 # XLINE -- current line number in input file # XNODE -- XTYPE, XITEM, XATTR combined into a single string # XERROR -- error text, set on parse error # Returns: # 1 on successful read: XTYPE, XITEM, XATTR are set accordingly # "" at end of file or parse error, XERROR is set on error # Private Data: # _XMLIO -- buffer, XLINE, XPATH for open files ##

Figure 2.2: Usage of Jan Weber’s getXML parser function

But some implementation details are noteworthy. Here, granularity of items

is different: All attributes are reported along with their tag item.

This results from a design decision: The getXML function

uses several variables to pass larger amounts of data back to the

caller. Finally a detail that did not become so obvious in this example.

Notice the second parameter of the getXML function (skipData).

Jan introduced an option that allows skipping textual data in

between tags (mixed content).

#!/usr/bin/nawk -f

BEGIN {

XMLDEPTH=0

while ( getXML(ARGV[1],1) ) {

if ( XTYPE == "TAG" ) {

printf("\n%*s%s", 2*XMLDEPTH, "", XITEM)

XMLDEPTH++

for (attrName in XATTR)

printf(" %s='%s'", attrName, XATTR[attrName])

} else if ( XTYPE == "END" ) {

XMLDEPTH--

}

}

}

Figure 2.3: Outlining an XML file with Jan Weber’s getXML parser

Previous: Jan Weber’s getXML script, Up: Reading XML Data with POSIX AWK [Contents][Index]

2.3 A portable subset of gawk-xml

Jan Webers’s portable script in the previous section was

a significant advance over Steve Coile’s script. Handling of XML

events feels much more like it does in the gawk-xml API. But after

some time of working with the script, the differences between it

and the gawk-xml API become a bit annoying to remember. As a

consequence, we took Jan’s script, copied it into a new script

file getXMLEVENT.awk and changed its inner working so

as to minimize differences to the gawk-xml API. If you intend to

use the script as a template for your own work, search for the

file getXMLEVENT.awk in the following places:

- The

gawk-xmldistribution file contains a copy in the awklib/xml directory. - If

gawk-xml/usr/share/awk/on GNU/Linux machines).

The file getXMLEVENT.awk as it is serves well if you want

to start writing a script from scratch. It already contains an

event-loop in the BEGIN pattern of the script.

Just take the main body of the event-loop (the while

loop) and change those parts that react on incoming events of the

XML event stream.

But in the remainder of this section, we will assume that

we already have a script and we intend to port it.

Attempting to describe the approach in the most useful way,

we will go through

two typical use-cases of the getXMLEVENT.awk template file.

First we look at the necessary steps for taking an existing script

written for gawk-xml and making it portable for use on Solaris

machines (to name just the worst case scenario). Secondly, we go

the other way round: take an existing portable script and describe

the necessary steps for converting it into an gawk-xml script.

- Converting a script from

gawk-xmlinto portable subset - Converting a script from portable subset into

gawk-xml

2.3.1 Converting a script from gawk-xml into portable subset

The general approach in porting a script that uses gawk-xml

features to a portable script is always the same. No matter

if we port the original outline script (see Figure 3.2)

or if we take a non-trivial application like the DTD generator

(see Generating a DTD from a sample file).

Now we proceed through the following series of steps.

- We always start by first copying the template file getXMLEVENT.awk into a new file (dtdgport.awk in the case of the DTD generator).

- Near the top of the new script file, remove the main body of the original event loop.

- Replace the original event loop with the pattern-action pairs

from the application. In the case of the DTD generator, take

the first part of the source code (Figure 7.19)

and insert the

XMLSTARTELEMaction into the event loop. - Append the

ENDpattern of Figure 7.19 verbatim after the event loop. - Append the second part of the application (containing function declarations in Figure 7.20) verbatim.

- Take the resulting application source file and try if it

really works in the expected way. Compare the resulting

output to Figure 7.18. You will find that the

resulting output (a DTD) is indeed exactly the same.

awk -f dtdgport.awk docbook_chapter.xml

It is amazing how simple and effective it is to turn an gawk-xml

script into a portable script. After all, you should never forget

about the limitations of the portable script. This tiny little

XML parser is far from being a complete XML parser. Most notably,

it misses the ability to read files with multi-byte characters

and other Unicode encoding details. Experience tells us that sooner

or later your tiny little parser will stumble across a customer-

supplied XML file with special characters in it (copyright marks,

typographic dashes, european accent characters, or even chinese

characters). Then the need arises to port the script back to the

full gawk-xml environment with its full XML parsing capability.

When you eventually reach this point, continue reading the next

subsection and you will find advice on porting your script

back to gawk-xml.

2.3.2 Converting a script from portable subset into gawk-xml

Conversion of scripts from the portable subset to full gawk-xml

is even easier. This ease derives from the similarity of the

portable subset’s event-loop with the API in

Concise Interface - Reduced set of variables shared by all events

as described in the

gawk-xml Core Language Interface Summary.

The main point in porting is replacing the invocation of

getXMLEVENT with getline. Step through the

following task list and you will soon arrive at an application

that supports all subtleties of the XML data.

- Copy the application source code file into a new source code file.

- In the new source code file, insert

@load "xml"at the top of the file. - In the

BEGINpattern, convert the condition in thewhilestatement of the event-loop.while (getXMLEVENT(ARGV[1])) {gets transformed into

while (getline > 0) { - Leave the rest of the

BEGINpattern with its event-loop unchanged. - Remove the functions

getXMLEVENT,unescapeXML, andcloseXMLEVENT. - Take the resulting application source file and try if it really works in the expected way. Compare the resulting output.

Next: Some Convenience with the xmllib library, Previous: Reading XML Data with POSIX AWK, Up: General Introduction [Contents][Index]

3 XML Core Language Extensions of gawk

In How to traverse the tree with gawk, we have concentrated on the tree

structure of the XML file in Figure 1.3.

We found the two patterns XMLSTARTELEM and XMLENDELEM

which help us following the process of tree traversal. In this

chapter we will find out what the other XML-specific patterns

are. All of them will be used in example scripts and their meaning

will be described informally.

- Checking for well-formedness

- Printing an outline of an XML file

- Pulling data out of an XML file

- Character data and encoding of character sets

- Dealing with DTDs

- Sorting out all kinds of data from an XML file

3.1 Checking for well-formedness

One of the advantages of using the XML format for storing data is that there are formalized methods of checking correctness of the data. Whether the data is written by hand or it is generated automatically, it is always advantageous to have tools for finding out if the new data obeys certain rules (is a tag misspelt ? another one missing ? a third one in the wrong place ?).

These mechanisms for checking correctness are applied at

different levels. The lowest level being well-formedness.

The next higher levels of correctness-check are the level of

the DTD (see Generating a DTD from a sample file)

and (even higher, but not required yet by standards)

the Schema. If you have a DTD (or Schema) specification for your

XML file, you can hand it over to a validation tool, which applies

the specification, checks for conformance and tells you the result.

A simple tool for validation against a DTD is

xmllint,

which is part of libxml and therefore installed on

most GNU/Linux systems. Validation against a Schema can be

done with more recent versions of xmllint or with

the xsv

tool.

There are two reasons why validation is currently not

incorporated into the gawk interpreter.

- Validation is not trivial and only DTD-validation has reached a proper level of standardization, support and stability.

- We want a tool that can process all well-formed XML files, not just a tool for processing clean data. A good tool is one that you can rely on and use for fixing problems. What would you think of a car that rejected to drive outside just because there is some mud on the street and the sun isn’t shining ?

Here is a script for testing well-formedness of XML data.

The real work of checking well-formedness is done by the

XML parser incorporated into gawk. We are only

interested in the result and some details for error

diagnostic and recovery.

@load "xml"

END {

if (XMLERROR)

printf("XMLERROR '%s' at row %d col %d len %d\n",

XMLERROR, XMLROW, XMLCOL, XMLLEN)

else

print "file is well-formed"

}

As usual, the script starts with switching gawk

into XML mode. We are not interested in the content of

the nodes being traversed, therefore we have no action

to be triggered for a node. Only at the end (when the

XML file is already closed) we look at some variables

reporting success or failure. If the variable

XMLERROR ever contains anything other than 0

or the empty string, there is an error in parsing and

the parser will stop tree traversal at the place where

the error is. An explanatory message is contained in

XMLERROR (whose contents depends on the specific

parser used on this platform). The other variables in

the example contain the line number and the column in

which the XML file is formed badly.

Next: Pulling data out of an XML file, Previous: Checking for well-formedness, Up: XML Core Language Extensions of gawk [Contents][Index]

3.2 Printing an outline of an XML file

When working with XML files, it is sometimes necessary to gain some oversight over the structure an XML file. Ordinary editors confront us with a view such as in Figure 1.2 and not a pretty tree view such as in Figure 1.3. Software developers are used to reading text files with proper indentation like the one in Figure 3.1.

book lang='en' id='hello-world'

bookinfo

title

chapter id='introduction'

title

para

sect1 id='about-this-book'

title

para

sect1 id='work-in-progress'

title

para

Figure 3.1: XML data (DocBook file) as a tree with proper indentation

Here, it is a bit

harder to recognize hierarchical dependencies among the

nodes. But proper indentation allows you to oversee files

with more than 100 elements (a purely graphical view of

such large files gets unbearable). Figure 3.1

was inspired by the tool outline that comes with

the Expat XML parser.

The outline tool produces such an indented output

and we will now write a script that imitates this kind

of output.

@load "xml"

XMLSTARTELEM {

printf("%*s%s", 2*XMLDEPTH-2, "", XMLSTARTELEM)

for (i=1; i<=NF; i++)

printf(" %s='%s'", $i, XMLATTR[$i])

print ""

}

Figure 3.2: outline.awk produces a tree-like outline of XML data

The script outline.awk in Figure 3.2 looks very similar to the

other scripts we wrote earlier, especially the script

max_depth.awk, which also traversed nodes and

remembered the depth of the tree while traversing. The

most important differences are in the lines with the

print statements. For the first time, we don’t

just check if the XMLSTARTELEM variable contains

a tag name, but we also print the name out, properly indented

with a printf format statement (two blank characters

for each indentation level).

At the end of the description of the max_depth.awk

script in Figure 1.4 we already mentioned the

variable XMLDEPTH, which is used here as a replacement

of the depth variable. As a consequence, bookkeeping

with the depth variable in an action after the

XMLENDELEM is not necessary anymore. Our script has

become shorter and easier to read.

The other new phenomenon in this script is the associative

array XMLATTR. Whenever we enter a markup block

(and XMLSTARTELEM is non-empty), the array XMLATTR

contains all the attributes of the tag. You can find out the

value of an attribute by accessing the array with the attribute’s

name as an array index. In a well-formed XML file, all the attribute

names of one tag are distinct, so we can be sure that each attribute

has its own place in the array. The only thing that’s left to do is

to iterate over all the entries in the array and print name and value

in a formatted way. Earlier versions of this script really iterated

over the associative array with the for (i in XMLATTR)

loop. Doing so is still an option, but in this case we wanted to

make sure that attributes are printed in exactly the same oder

that is given in the original XML data. The exact order of attribute

names is reproduced in the fields $1 .. $NF. So the

for loop can iterate over the attributes names in the

fields $1 .. $NF and print the attribute values

XMLATTR[$i].

Please note that, staring with gawk 4.2 which supports version 2 of the

API, the XMLATTR values are considered to be user input and are eligible

for the strnum attribute. So if the values appear to be numeric, gawk

will treat them as numbers in comparisons. This feature was not available prior

to version 2 of the gawk API.

Next: Character data and encoding of character sets, Previous: Printing an outline of an XML file, Up: XML Core Language Extensions of gawk [Contents][Index]

3.3 Pulling data out of an XML file

The script we are analyzing in this section produces

exactly the same output as the script in the previous

section. So, what’s so different about it that

we need a second one ? It is the programming style which

is employed in solving the problem at hand. The previous

script was written so that the pattern XMLSTARTELEM

is positioned within the pattern.

This is ordinary AWK programming style, but it is not the way

users of other programming languages were brought up with. In

a procedural language, the software developer expects that he

himself determines control flow within a program. He writes

down what has to be done first, second, third and so on.

In the pattern-action model of AWK, the novice software

developer often has the oppressive feeling that

- he is not in control

- events seem to crackle down on him from nowhere

- data flow seems chaotic and invariants don’t exist

- assertions seem impossible

This feeling is characteristic for a whole class of programming environments. Most people would never think of the following programming environments to have something in common, but they have. It is the absence of a static control flow which unites these environments under one roof:

- In GUI frameworks like the X Window system, the main program is a trivial event loop – the main program does nothing but wait for events and invoke event-handlers.

- In the Prolog programming language, the main program has the form of a query – and then the Prolog interpreter decides which rules to apply to solve the query.

- When writing a compiler with the

lexandyacctools, the main program only invokes a functionyyparse()and the exact control flow depends on the input source which controls invocation of certain rules. - When writing an XML parser with the Expat XML parser, the main program registers some callback handler functions, passes the XML source to the Expat parser and the detailed invocation of callback function depends on the XML source.

- Finally, AWK’s pattern-action encourages writing scripts that have no main program at all.

Within the context of XML, a terminology has been invented which distinguishes the procedural pull style from the event-guided push style. The script in the previous section was an example of a push-style script. Recognizing that most developers don’t like their program’s control flow to be pushed around, we will now present a script which pulls one item after the other from the XML file and decides what to do next in a more obvious way.

@load "xml"

BEGIN {

while (getline > 0) {

switch (XMLEVENT) {

case "STARTELEM": {

printf("%*s%s", 2*XMLDEPTH-2, "", XMLSTARTELEM)

for (i=1; i<=NF; i++)

printf(" %s='%s'", $i, XMLATTR[$i])

print ""

}

}

}

}

One XML event after the other is pulled out of the data

with the getline command. It’s like feeling each grain

of sand pour through your fingers. Users who prefer this style

of reading input will also appreciate another novelty: The variable

XMLEVENT. While the push-style script in

Figure 3.2 used the event-specific variable

XMLSTARTELEM to detect the occurrence of a new XML element,

our pull-style script always looks at the value of the same

universal variable XMLEVENT to detect a new XML element.

We will dwell on a more detailed example in Figure 7.14.

Formally, we have a script that consists of one BEGIN

pattern followed by an action which is always invoked. You

see, this is a corner case of the pattern-action model

which has been reduced so wide that its essence has disappeared.

Instead of the patterns you now see the cases of switch

statement, embedded into a while loop (for reading the

file item-wise).

Obviously, we have explicite conditionals now, instead of the

implicite ones we used formerly. The actions invoked within

the case conditions are the same we have seen in the

push approach.

Next: Dealing with DTDs, Previous: Pulling data out of an XML file, Up: XML Core Language Extensions of gawk [Contents][Index]

3.4 Character data and encoding of character sets

All of the example scripts we have seen so far have one thing in common: they were only interested in the tree structure of the XML data. None of them treated the words between the tags. When working with files like the one in Figure 1.2, you are sometimes more interested in the words that are embedded in the nodes of Figure 1.3. XML terminology calls these words character data. In the case of a DocBook file one could call these words which are interspersed between the tags the payload of the whole document. Sometimes one is intersted in freeing this payload from all the useless stuff in angle brackets and extract the character data from the file. The structure of the document may be lost, but the bare textual content in ASCII is revealed and ready for importing it into an application software which does not understand XML.

Hello, world Introduction This is the introduction. It has two sections About this book This is my first DocBook file. Warning This is still under construction.

Figure 3.3: Example of some textual data from a DocBook file

You may wonder where the blank lines between the text lines come from. They are part of the XML file; each line break in the XML outside the tags (even the one after the closing angle bracket of a tag) is character data. The script which produces such an output is extremely simple.

@load "xml"

XMLCHARDATA { printf $0 }

Figure 3.4: extract_characters.awk extracts textual data from an XML file

Each time some character data is parsed, the XMLCHARDATA

pattern is set to 1 and the character data itself is stored into

the variable $0. A bit unusual is the fact that the text

itself is stored into $0 and not in XMLCHARDATA.

When working with text, one often needs the text split into fields

like AWK does it when the interpreter is not in XML mode. With

the words stored in fields $1 … $NF, we now

have found a way to refer to isolated words again; it would be

easy to extend the script above so that it counts words like the

script wc.awk did.

Most texts are not as simple as Figure 3.3.

Textual data in computers is not limited to 26 characters and some

punctuation marks anymore. On all keyboards we have various kinds

of brackets (<, [ and {) and in Europe we have had things like the

ligature (Æ) or the umlaut (ü) for centuries. Having thousands

of symbols is not a problem in itself, but it became a problem when

software applications started representing these symbols with different

bytes (or even byte sequences). Today we have a standard for

representing all the symbols in the world with a byte sequence –

Unicode.

Unfortunately, the accepted standard came too late. Earlier

standardization efforts had created ways of representing subsets

of the complete symbol set, each subset containing 256 symbols

which could be represented by one byte. These subsets had names

which are still in use today (like ISO-8859-1 or IBM-852 or ISO-2022-JP).

Then came the programming language Java with a char data type

having 16 bits for each character. It turned out that 16 bits were

also not enough to represent all symbols. Having recognized the fixed

16 bit characters as a failure, the standards organizations finally

established the current Unicode standard. Today’s Unicode character

set is a wonderful catalog of symbols – the book mentioned above

needs more than a 1000 pages to list them all.

And now to the ugly side of Unicode:

- The names of the 8 bit character sets are still in use and have to be supported by XML parsers and the software built upon them.

- Symbols in the Unicode catalog have an unambiguous number, but their number may be encoded in many different ways with varying numbers of bytes per character.

- When displaying a text, you have to decide which encoding you want to use; if the text is encoded differently, you will see strange symbols that you have never dreamed of.

Notice that the character set and the character encoding are very different notions. The former is a set in the mathematical sense while the latter is a way of mapping the number of the character into a byte sequence of varying length. To make things worse: The use of these terms is not consistent – neither the XML specification nor the literature distinguishes the terms cleanly. For example, take the citation from the excellent O’Reilly book XML in a Nutshell in chapter 5.2:

5.2 The Encoding Declaration

Every XML document should have an encoding declaration as part of its XML declaration. The encoding declaration tells the parser in which character set the document is written. It’s used only when other metadata from outside the file is not available. For example, this XML declaration says that the document uses the character encoding US-ASCII:

<?xml version="1.0" encoding="US-ASCII" standalone="yes"?>This one states that the document uses the Latin-1 character set, though it uses the more official name ISO-8859-1:

<?xml version="1.0" encoding="ISO-8859-1"?>Even if metadata is not available, the encoding declaration can be omitted if the document is written in either the UTF-8 or UTF-16 encodings of Unicode. UTF-8 is a strict superset of ASCII, so ASCII files can be legal XML documents without an encoding declaration.

Several times a character set name is assigned to an encoding declaration – the book does it and the XML samples do it too. Only in the last paragraph the usage of terms is clean: UTF-8 is the default way of encoding a character into a byte sequence.

After this unpleasant excursion into the cultural history of

textual data in occidental societies, let’s get back to gawk

and see how the concepts of the encoding and the character set

are incorporated into the language.

Three variables are all that you need to know, but each of

them comes from a different context. Take care that you recognize

the difference between the XML document, gawk’s internal

data handling and the influence of an environment variable from

the shell environment setting the locale.

-

XMLATTR["ENCODING"]is a pattern variable that (when non-empty andXMLDECLARATIONis triggered) contains the name of the character encoding in which the XML file was originally encoded. This information comes from the first line of the XML file (if the line contains the usual XML header). There is no use in overwriting this variable, the variable is meant to tell you what’s in the XML data and nothing happens when you changeXMLATTR["ENCODING"]. -

XMLCHARSETis the variable to change if you want to see the XML data converted to a character set of your own choice. When you set this variable, thegawkinterpreter will remember the character set of your choice. But this choice will take effect only upon opening the next file. A change ofXMLCHARSETwill not influence XML data from a file that has already been opened earlier for reading. -

LANGis an environment variable of your operating system. It tells thegawkinterpreter which value to use forXMLCHARSETon initial startup when nothing has been said about it in the user’s script. In the absence of any setting forLANG,US-ASCIIis used as the default encoding. Up to now, we have always talked about the encoding and character set of the data to be processed. Remember that the source code of your program is also written in some character set. It is usually theLANGcharacter set that is used while writing programs. Imagine what happens when you have a program containing a character from your native character set, for which there is no encoding in the character set used at run-time. The alert reader will notice how consequentgawkis in following the Unicode tradition of mixing up character encoding and character set.

After so much scholastic reasoning, you might be inclined

to presume that character sets and encodings are hardly of

any use in real life (except for befuddling the novice).

The following example should dispel your doubts.

In real life, circumstance transcending sensible reasoning

could require you to import the text in Figure 3.3

into a Microsoft Windows application. Contemporary flavours of

Microsoft Windows prefer to store textual data in UTF-16.

So, a script for converting the text to UTF-16 would

be a nice tool to have – and you already have such a tool.

The script extract_characters.awk in Figure 3.4

will do the job, if you tell the gawk interpreter to use

the UTF-16 encoding when reading the DocBook file.

Two alternatives ways of reaching this target arise:

- Change the script and insert a line setting

XMLCHARSETtoUTF-16. After invocation, thegawkinterpreter will now print the same data as in Figure 3.3, but converted toUTF-16.BEGIN { XMLCHARSET="utf-16" } - Do not change the script, but before invoking the

gawkinterpreter, set the environment variableLANGtoUTF-16.

The result will be the same in both cases, provided your operating system supports these character sets and encodings. In real life, it is probably a better idea to avoid the second of these approaches because it requires changes (and possibly side-effects) at the level of the command line shell.

Next: Sorting out all kinds of data from an XML file, Previous: Character data and encoding of character sets, Up: XML Core Language Extensions of gawk [Contents][Index]

3.5 Dealing with DTDs

Earlier in this chapter we have seen that gawk does

not validate XML data against a DTD. The declaration of a document

type in the header of an XML file is an optional part of the data,

not a mandatory one. If such a declaration is present (like it is

in Figure 1.2), the reference to the DTD will not be

resolved and its contents will not be parsed. However, the presence

of the declaration will be reported by gawk. When the declaration

starts, the variable XMLSTARTDOCT contains the name of the

root element’s tag; and later, when the declaration ends, the variable

XMLENDDOCT is set to 1. In between, the array variable XMLATTR

will be populated with the values of the public identifier

of the DTD (if any) and the value of the system’s identifier of the DTD

(if any). Other parts of the declaration (elements, attributes and entities)

will not be reported.

@load "xml"

XMLDECLARATION {

version = XMLATTR["VERSION" ]

encoding = XMLATTR["ENCODING" ]

standalone = XMLATTR["STANDALONE" ]

}

XMLSTARTDOCT {

root = XMLSTARTDOCT

pub_id = XMLATTR["PUBLIC" ]

sys_id = XMLATTR["SYSTEM" ]

intsubset = XMLATTR["INTERNAL_SUBSET"]

}

XMLENDDOCT {

print FILENAME

print " version '" version "'"

print " encoding '" encoding "'"

print " standalone '" standalone "'"

print " root id '" root "'"

print " public id '" pub_id "'"

print " system id '" sys_id "'"

print " intsubset '" intsubset "'"

print ""

version = encoding = standalone = ""

root = pub_id = sys_id = intsubset ""

}

Figure 3.5: db_version.awk extracts details about the DTD from an XML file

Most users can safely ignore these variables if they are only interested in the data itself. But some users may take advantage of these variables for checking requirements of the XML data. If your data base consists of thousands of XML file of diverse origins, the public identifier of their DTDs will help you gain an oversight over the kind of data you have to handle and over potential version conflicts. The script in Figure 3.5 will assist you in analyzing your data files. It searches for the variables mentioned above and evaluates their content. At the start of the DTD, the tag name of the root element is stored; the identifiers are also stored and finally, those values are printed along with the name of the file which was analyzed. After each DTD, the remembered values are set to an empty string until the DTD of the next file arrives.

In Figure 3.6 you can see an example output of

the script in Figure 3.5. The first entry is the

file we already know from Figure 1.2. Obviously, the first

entry is a DocBook file (English version 4.2) containing a

book element which has to be validated against a local

copy of the DTD at CERN in Switzerland. The second file is a

chapter element of DocBook (English version 4.1.2) to

be validated against a DTD on the Internet. Finally, the third

entry is a file describing a project of the GanttProject application.

There is only a tag name for the root element specified, a DTD

does not seem to exist.

data/dbfile.xml version '' encoding '' standalone '' root id 'book' public id '-//OASIS//DTD DocBook XML V4.2//EN' system id '/afs/cern.ch/sw/XML/XMLBIN/share/www.oasis-open.org/docbook/xmldtd-4.2/docbookx.dtd' intsubset '' data/docbook_chapter.xml version '' encoding '' standalone '' root id 'chapter' public id '-//OASIS//DTD DocBook XML V4.1.2//EN' system id 'http://www.oasis-open.org/docbook/xml/4.1.2/docbookx.dtd' intsubset '' data/exampleGantt.gan version '1.0' encoding 'UTF-8' standalone '' root id 'ganttproject.sourceforge.net' public id '' system id '' intsubset ''

Figure 3.6: Details about the DTDs in some XML files

You may wish to make changes to this script if you need it in

daily work. For example, the script currently reports nothing

for files which have no DTD declaration in them. You can easily

change this by appending an action for the END rule which

reports in case all the variables root, pub_id

and sys_id are empty. As it is, the script parses the

entire XML file, although the DTD is always positioned at the

top, before the root element. Parsing the root element is

unnecessary and you can improve the speed of the script significantly

if you tell it to stop parsing when the first element (the root

element) comes in.

XMLSTARTELEM { nextfile }

Previous: Dealing with DTDs, Up: XML Core Language Extensions of gawk [Contents][Index]

3.6 Sorting out all kinds of data from an XML file

If you have read this web page sequentially until now, you have understood how to read an XML file and treat it as a tree. You also know how to handle different character encodings and DTD declarations. This section is meant to give you an overview of what other patterns there are when you work with XML files. The overview is meant to be complete in the sense that you will see the name of every pattern involved and an example of usage. Conceptually, you will not see much new material, this is only about some new variables for passing information from the XML file. Here are the new patterns:

- XMLPROCINST contains the name of a processing instruction, while $0 contains its contents.

- XMLCOMMENT indicates an XML comment. The comment itself is in $0.

-

XMLDECLARATION indicates that the XML version number from

the first line of the XML file can be read from

XMLATTR["VERSION"]. - XMLUNPARSED indicates a text that did not fit into any other category. Its contents is in $0.

The following script is meant to demonstrate all XML patterns

and variables. It can help you while you are debugging other

scripts because this script will show you everything that is

in the XML file and how it is read by gawk.

@load "xml"

# Set XMLMODE so that the XML parser reads strictly

# compliant XML data. Convert characters to Latin-1.

BEGIN { XMLMODE=1 ; XMLCHARSET = "ISO-8859-1" }

# Print an outline of nested tags and attributes.

XMLSTARTELEM {

printf("%*s%s", 2*XMLDEPTH-2, "", XMLSTARTELEM)

for (i=1; i<=NF; i++)

printf(" %s='%s'", $i, XMLATTR[$i])

print ""

}

# Upon closing tag, XMLPATH still holds the tag name.

XMLENDELEM { printf("%s %s\n", "XMLENDELEM", XMLPATH) }

# XMLEVENT holds the name of the current event.

XMLEVENT { print "XMLEVENT", XMLEVENT, XMLNAME, $0 }

# Character data will not be lost.

XMLCHARDATA { print "XMLCHARDATA", $0 }

# Processing instruction and comments instructions will be reported.

XMLPROCINST { print "XMLPROCINST", XMLPROCINST, $0 }

XMLCOMMENT { print "XMLCOMMENT", $0 }

# CDATA sections are used for quoting verbatim text.

XMLSTARTCDATA { print "XMLSTARTCDATA" }

# CDATA blocks have an end that is reported.

XMLENDCDATA { print "XMLENDCDATA" }

# The very first event holds the version info.

XMLDECLARATION {

version = XMLATTR["VERSION" ]

encoding = XMLATTR["ENCODING" ]

standalone = XMLATTR["STANDALONE"]

}

# DTDs, if present, are indicated as such.

XMLSTARTDOCT {

root = XMLSTARTDOCT

print "XMLATTR[PUBLIC]", XMLATTR["PUBLIC"]

print "XMLATTR[SYSTEM]", XMLATTR["SYSTEM"]

print "XMLATTR[INTERNAL_SUBSET]", XMLATTR["INTERNAL_SUBSET"]

}

# The end of a DTD is also indicated.

XMLENDDOCT { print "root", root }

# Unparsed text occurs rarely.

XMLUNPARSED { print "XMLUNPARSED", $0 }

# XMLENDDOCUMENT occurs only with XML data that is not

# strictly compliant to standards (multiple root elements).

XMLENDDOCUMENT { print "XMLENDDOCUMENT" }

# At the end of the file, you can check if an error occurred.

END { if (XMLERROR)

printf("XMLERROR '%s' at row %d col %d len %d\n",

XMLERROR, XMLROW, XMLCOL, XMLLEN)

}

Figure 3.7: The script demo_pusher.awk demonstrates all variables of gawk-xml

Next: DOM-like access with the xmltree library, Previous: XML Core Language Extensions of gawk, Up: General Introduction [Contents][Index]

4 Some Convenience with the xmllib library

All the variables that were added to the AWK language to allow for reading XML files show you one event at a time. If you want to rearrange data from several nodes, you have to collect the data during tree traversal. One example for this situation is the name of the parent node which is needed several time in the examples of Some Advanced Applications.

Stefan Tramm has written the xmllib library because he wanted

to simplify the use of gawk for command line usage (one-liners).

His library comes as an ordinary script file with AWK code and is

automatically included upon invocation of xmlgawk.

It introduces new variables for easy handling of character data

and tag nesting. Stefan contributed the library as well as the

xmlgawk wrapper script.

Next: Main features, Up: Some Convenience with the xmllib library [Contents][Index]

4.1 Introduction Examples

The most used AWK script is something like this:

$ awk '/matchrx/ { print $3, $1 } foo.dat

which assumes a line at a time approach and the division of a line (record) into words (fields), where only some fields are printed for records that match. With xmlgawk this does not change drastically, the approach is now one XML token at a time:

$ xmlgawk '/on-loan/ { grep() }' books.xml

which prints the complete XML subtree, where "on-loan" matches either characterdata, some part of a start- or endelement or some part of an attributname or -value. The function grep() provided in the xmllib.awk does all the magic for you. If you need a simple prettyprinter for an XML stream (because there are perhaps no new lines in the file), then you can use this:

$ xmlgawk 'SE { grep(4) }' books.xml

The number "4" gives the indention. The variable SE is set on every startelement, including the root element. This is an ideal command line idiom. Faster (in CPU time) xmlgawk solutions are possible, but whats the difference between 100msec or 1 second for a quick check? The second most anticipated usage is searching through parts of XML documents and printing the results in a nicer human readable form:

$ xmlgawk '

EE == "title" { t = CDATA }

EE == "author" { w = CDATA }

EE == "book" && ATTR[PATH"@publisher"] == "WROX" { print "author:", w, "title:", t }

' books.xml

This script memorizes every <author> and <title> and prints them only, when a <book> has the attribute "publisher" with the value "WROX". The variable EE is set with the name of an endelement, The variable PATH contains all ’open’ startelements before the current one in the document. The array ATTR contains all XML Attributes of every startelement in PATH. Here is a little example to make it clearer:

$ xmlgawk '

SE { print "SE", SE

print " PATH", PATH

print " CDATA", CDATA

XmlTraceAttr(PATH)

}

EE { print "EE", EE

print " PATH", PATH

print " CDATA", CDATA

}

' books.xml

SE books

PATH /books

CDATA

SE book

PATH /books/book

CDATA

ATTR[/books/book@on-loan]="Sanjay"

ATTR[/books/book@publisher]="IDG books"

SE title

PATH /books/book/title

CDATA

EE title

PATH /books/book/title

CDATA XML Bible

SE author

PATH /books/book/author

CDATA

EE author

PATH /books/book/author

CDATA Elliotte Rusty Harold

EE book

PATH /books/book

CDATA

The variable CDATA contains the character data right before the start- or endelement, which is very convenient in the above examples and in daily life.

Next: Usage of xmllib.awk, Previous: Introduction Examples, Up: Some Convenience with the xmllib library [Contents][Index]

4.2 Main features

The main ideas are:

- make character data available preceding start- and endelements

- provide the current path (parse stack, nesting level)

- make all startelement attributes of the complete path available

- provide helper functions for output

- provide help for grep-like tools

- provide debug support

The following sections are devoted to the above topics.

- Character Data (CDATA)

- Start- and End-elements (SE, EE, PATH, ATTR[])

- Comments (CM)

- Processing Instructions (PI)

- Real Character Data (XmlCDATA)

- grep function

- XmlStartElement and XmlEndElement functions

- XmlPathTail function

- XmlTraceAttr function

- Simple String manipulation functions

- Minor Issues

Character Data (CDATA)

The variable CDATA collects the characters of all XMLCHARDATA events. At an XMLSTARTELEM or XMLENDELEM event the CDATA variable is trimmed (by calling the function trim()), that means leading and trailing whitespace ([:space:]) characters are removed.

Please, keep in mind to use the idiom ’print quoteamp(CDATA)’ in your code, where the output is again XML or (X)HTML.

Start- and End-elements (SE, EE, PATH, ATTR[])

The variable SE has the same content and behaviour as XMLSTARTELEM, but it is much faster to type (EE does the same for XMLENDELEM).

The variable PATH contains all currently ’open’ startelements. It is like a parse stack and allows checks for the context of a current element. Elements are delimited by slashes "/". If PATH ist not empty, it begins with a "/".

The ATTR array stores every attribute of ’open’ startelements. This is sometimes very convenient, because you can simply ’look back’ for already seen attributes. Attributenames are separated by an at-sign "@" from its element path, eg:

/books/book@publisher

The helper function XmlTraceAttr prints all attributes for the specified path (if no path argument is given, the function defaults to PATH).

Comments (CM)

CM contains the trim-ed comment string in XMLCOMMENT, and $0 holds the completely reconstructed comment.

All comments in a character data section will be seen by the user program before the accumulated CDATA variable delivers the characters.

Processing Instructions (PI)

All processing instruction are available via PI (which has the same content as XMLPROCINST). $0 contains the completely reconstructed processing instruction.

The very first procinst is specially handled by expat and den XML core extension. xmllib.awk takes care of this and delivers the very first procinst as a normal procinst via PI.